Hey fam, I am really excited to share the latest with Rover. I’m a little behind on my YouTube releases, so the next video won’t mention this yet, but I got new cameras for Rover!

This is the camera system:

https://www.e-consystems.com/nvidia-cameras/jetson-agx-xavier-cameras/four-synchronized-4k-cameras.asp#module-features

It’s four 4K cameras with hardware-synchronized shutters, and the whole system can stream from all four cameras simultaneously at 4K 30 fps each! I’m actually going to be able to incorporate video from Rover’s point of view in my videos, which I’m stoked about!
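
For a rough sense of how much data that is, here’s a back-of-the-envelope sketch. The 3840x2160 frame size and the 2 bytes per pixel (a packed 16-bit YUV format) are assumptions for illustration, not numbers taken from the camera spec, so treat the result as a ballpark only.

```python
# Rough uncompressed data rate for four synchronized 4K streams.
# ASSUMPTIONS (not from the camera spec): 3840x2160 frames, 2 bytes per pixel.
WIDTH, HEIGHT = 3840, 2160
FPS = 30
NUM_CAMERAS = 4
BYTES_PER_PIXEL = 2  # assumed packed 16-bit YUV 4:2:2


def raw_bytes_per_second(width, height, fps, cameras, bytes_per_pixel):
    """Return the uncompressed bytes per second summed over all cameras."""
    return width * height * bytes_per_pixel * fps * cameras


rate = raw_bytes_per_second(WIDTH, HEIGHT, FPS, NUM_CAMERAS, BYTES_PER_PIXEL)
print(f"{rate / 1e9:.2f} GB/s uncompressed")  # prints "1.99 GB/s uncompressed"
```

Under those assumptions, that’s about 2 GB of raw pixels every second, which is why any pipeline built on these cameras has to be careful about what it copies, stores, or compresses.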

The point of these cameras is to finally give Rover a suitable sensor system for autonomous driving. I’ve worked with lidar before, and those are very expensive sensors. I’ve long been interested in doing more with only cameras, and in fact I built Rover specifically so I could explore camera-based outdoor navigation. I’m finally getting there!

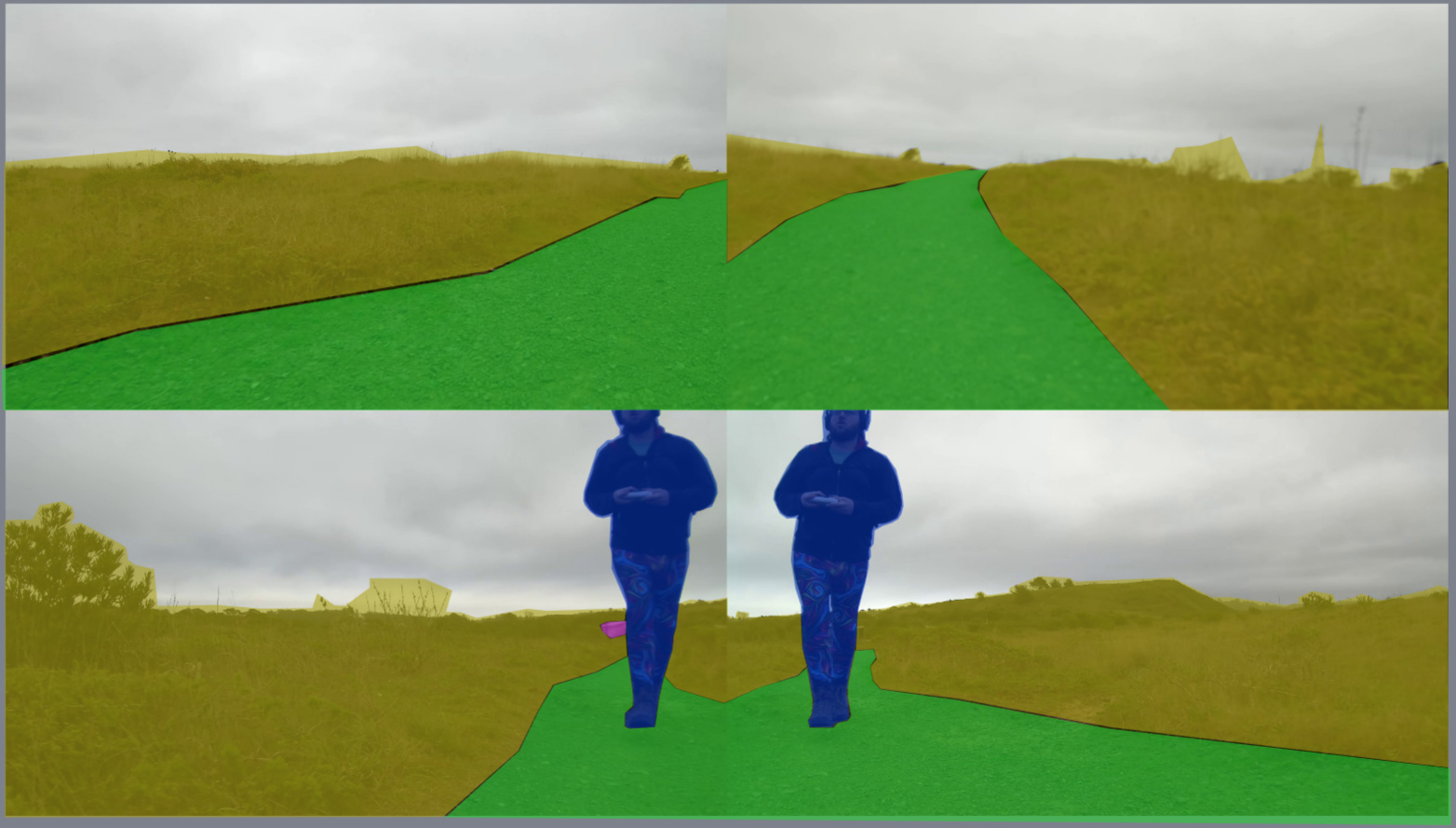

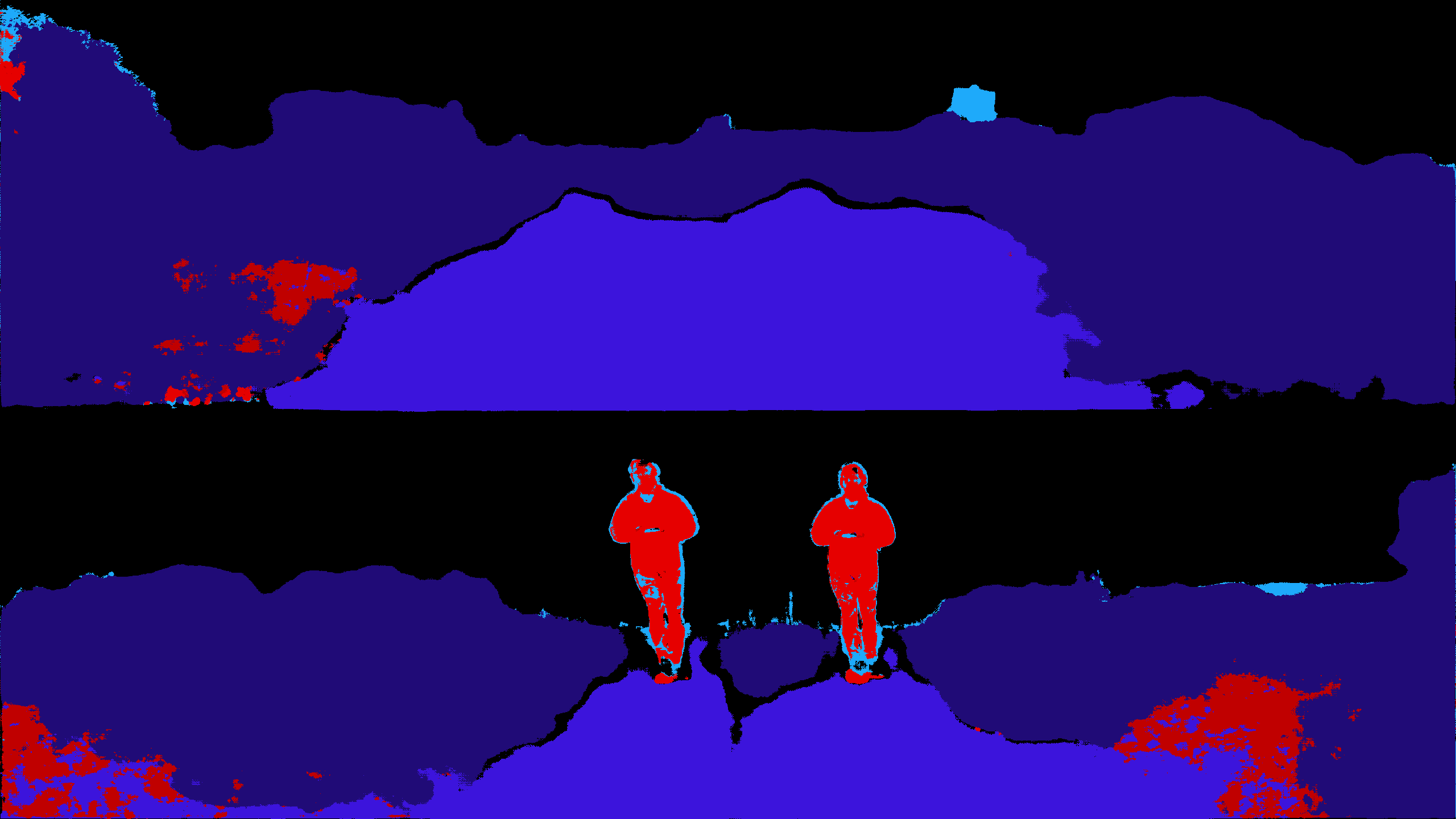

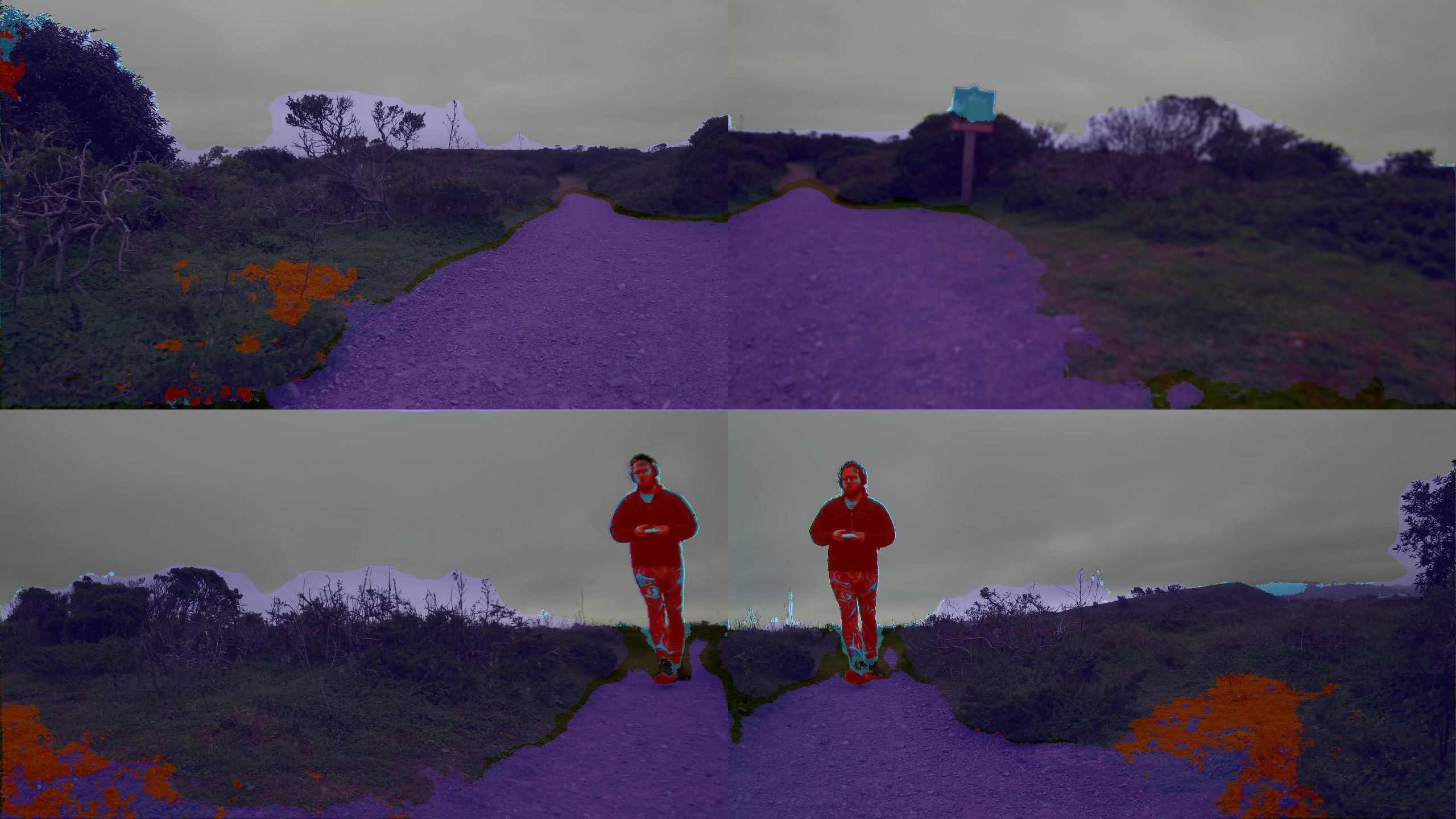

Below is a composite image from Rover’s two front cameras. To really appreciate the resolution, download the image or open it in a new window and zoom in! Here is a direct link to the image.

The cameras are positioned so they have only a little bit of stereo overlap at the front and rear. That stereo overlap can assist the algorithms that will learn structure from motion, like the types you’d see here:

And here’s Rover looking mighty fine!

Images licensed CC0 so do what you want with them.

For now I need to do some basic camera calibration and start to get a pipeline going. In time I will share a video with more details but I wanted to share a couple of awesome images I’ve gotten so far.

Love y’all.

Taylor